A system is an arrangement of parts or elements that together exhibit behavior or meaning that the individual constituents do not.[1]

"In ordinary language, we often call something complex when we can't fully understand it."[2]

That is to say, when it is:

- Uncertain

- Unpredictable

- Complicated

- Just plain difficult

A complex system, then, could be described as a set of interrelated and difficult-to-understand:

- Components

- Services

- Interfaces

- Interactions (including those with humans and the environment)

Considering that, how do we make systems that are inherently complex more understandable?

For a sufficiently large company, its systems and subsystems together can be treated as a complex system. When those systems need to be maintained, repaired, upgraded, or updated, or when changes are made to alter the way we interact with them, the work may require:

- A total system view (usually a defined system context and model)

- Objectives and needs analyses (a teleological analysis of a system)

- Requirements analyses (including stakeholder, business, and system requirements definition)

- Configuration management process (usually involving DevOps, SecOps, git, design control, environment management [like dev/test and blue/green deployments], and test & verification processes)

- Interface management process (usually involving implementing and documenting complex technologies with contextual ontological mapping, such as an Enterprise Service Bus)

- RMA analyses (reliability, maintainability, and availability, i.e., how often does it break, who can fix it, and how easy and cost-effective is it to fix)

- Observability processes and technology (often implementing methodologies such as MELT: Metrics, Events, Logs, and Traces)

- Producibility analyses (can it easily be produced and implemented elsewhere? Is it supported by a local supply chain, by open source, or by a third-party company? A big example in information systems is Infrastructure as Code [IaC], such as Terraform)

- Trade analyses (considering the predecessor, R&D, new innovations in the field, strategic advantage, and future valuations, which implementations and technologies are the best fit)

- Even more, such as FMEA (failure modes and effects analyses), risk analyses, modeling and simulation, cybersecurity analysis, data governance and enterprise architecture considerations, regulatory requirements, etc.
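As a rough illustration of the RMA item above, steady-state availability is commonly estimated from mean time between failures (MTBF) and mean time to repair (MTTR) as A = MTBF / (MTBF + MTTR). Components that must all be up (in series) multiply their availabilities, while redundant components (in parallel) multiply their unavailabilities. The sketch below assumes independent failures, and the hour values in it are made up purely for illustration.

```python
from math import prod


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the long-run fraction of time a component is up."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR must be non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


def series_availability(parts: list[float]) -> float:
    """Every part must be up for the system to be up (independent failures assumed)."""
    return prod(parts)


def parallel_availability(parts: list[float]) -> float:
    """Redundant parts: the system is up if at least one part is up (independent failures assumed)."""
    return 1 - prod(1 - a for a in parts)


if __name__ == "__main__":
    # Illustrative numbers only: fails roughly every 99 hours, takes roughly 1 hour to fix.
    a = availability(99, 1)
    print(f"single component: {a:.4f}")
    print(f"two in series:    {series_availability([a, a]):.4f}")
    print(f"two redundant:    {parallel_availability([a, a]):.4f}")
```

With these illustrative inputs, one component is about 99% available, chaining two of them in series drops the system to about 98%, and making them redundant raises it to about 99.99%. Every element added in series is one more thing that can take the whole system down.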

Complex systems can:

- Be tricky to understand.

- Lead to emergent behaviors and unknown system dynamics.

- Lead to 'Butterfly Effects' with widespread changes when systems are replaced.

When looking at 'systems of systems' (SoS), emergent behaviors and effects can be tricky to identify and quantify ahead of time. It's useful to compare a proposed digital transformation against a 'total systems view' by practicing enterprise systems engineering and architecture.
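One lightweight way to anticipate part of that ripple effect before replacing a system is to record which systems consume which others' outputs and then compute everything downstream of the change. The sketch below is a breadth-first search over a hypothetical dependency map. The system names are invented for illustration, and in practice the graph would come from enterprise architecture documentation. It can only surface known, documented dependencies. It cannot detect genuinely emergent behavior.

```python
from collections import deque

# Hypothetical dependency map: each system -> the systems that consume its outputs.
DEPENDENTS: dict[str, list[str]] = {
    "erp": ["billing", "reporting"],
    "billing": ["customer_portal", "reporting"],
    "crm": ["customer_portal"],
    "reporting": [],
    "customer_portal": [],
}


def impacted_by(changed: str, dependents: dict[str, list[str]]) -> set[str]:
    """Return every system transitively downstream of `changed` (breadth-first search)."""
    seen: set[str] = set()
    queue = deque(dependents.get(changed, []))
    while queue:
        system = queue.popleft()
        if system in seen:
            continue  # already visited; also protects against dependency cycles
        seen.add(system)
        queue.extend(dependents.get(system, []))
    return seen


if __name__ == "__main__":
    print(sorted(impacted_by("erp", DEPENDENTS)))
    print(sorted(impacted_by("crm", DEPENDENTS)))
```

Replacing the hypothetical `erp` system touches three downstream systems, including `customer_portal`, which never talks to `erp` directly. That indirect reach is exactly the kind of effect a total system view is meant to catch.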

Tackling Domain-Associated Bias

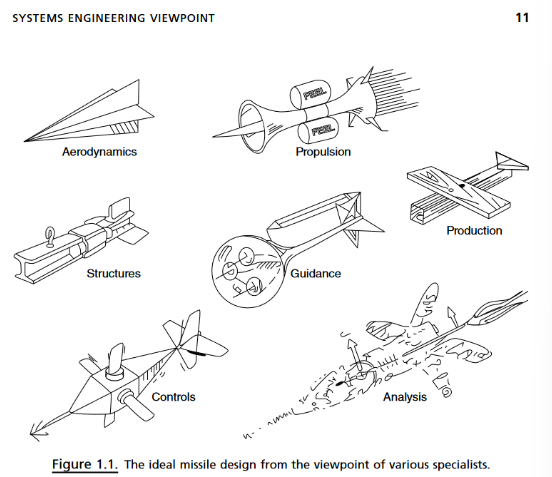

Also adding to the complexity of implementing proposed digital transformations is the domain bias that people from different backgrounds and fields bring when they inevitably provide input into the project. Integrated, cross-functional development teams can provide many useful perspectives, but translating between fields of knowledge or establishing common terms is often very challenging. Systems engineers are strong candidates for integrating these different perspectives, as they tend to have a wide breadth and significant depth of knowledge across various domains.

Although traditional systems engineers (of the aerospace, vehicular, weapons, and similar systems variety) may not have a background in business, it is important that systems engineers practicing enterprise systems engineering have foundational to intermediate knowledge of business domains. This might mean education in accounting, finance, information technology and systems, management, human resources, marketing, and more.

Luckily, many industrial and systems engineering programs include many of these topics in their curricula, especially in the context of systems optimization, so many professionals in these fields have already studied them. For example, both industrial engineers and cost accountants are concerned with cost optimization problems and implement models using methods like Theory of Constraints (ToC) and Activity-Based Costing (ABC). Additionally, most systems and industrial engineers are familiar with concepts such as value engineering, lean and agile process methodologies, common systems development lifecycles, and quality management systems.

To illustrate the importance of integrating domain perspectives, imagine an integrated development team for a building system that involves architects, HVAC engineers, electrical engineers, civil engineers, construction planners, project managers, procurement professionals, marketing professionals, and more. Architects may have a primary perspective of design, utility, space, and abstraction. HVAC engineers may be concerned with the thermal aspects of the building and its interior spaces. Electrical engineers will think about the building in terms of the power draw of the technologies inside and where wiring may need to be run. Civil engineers will be concerned with the soil under the building, the type of foundation, and the above-ground materials needed to construct the building envelope, given the weight that must be supported. Planners and project managers will be concerned with timelines and quality related to service contracts, and procurement professionals will be concerned with material lead times, local supply chains, and the overall producibility of the building given its location and the economic environment it is built in.

A professional from any individual domain is going to have a different primary concern for the system, one that may not account for, or even overlap with, the perspectives of others. This is where the systems engineering profession shines: it can integrate knowledge from various implementation specialists into the plans, schematic models, and processes of the system from conception through design, implementation, operation, maintenance, and disposal, while minimizing bias and maintaining a total-system view. Systems engineers can also maintain multiple viewpoints that capture the contextual ontologies of each domain, with an additional layer of context drawn from the objectives, needs, and goals of the whole system.

The figure above[3], from Kossiakoff et al.'s Systems Engineering Principles and Practice, 3rd Edition, illustrates this issue very well.

Gall's Law and Gall's Inverse

In 1975, John Gall, a faculty member at the University of Michigan, published his systems research in the book Systemantics: How Systems Work and Especially How They Fail. The most recent edition of this book, published in 2002, is titled The Systems Bible. Many subsequent scholars have referenced, quoted, and been influenced by the rules and principles in this book, including many who made major contributions to systems and software engineering research and practice: Ken Orr[4] (one of the creators of the Warnier/Orr diagram), Grady Booch[5] (one of the creators of UML, the Unified Modeling Language), and many more.

The rule from Systemantics: How Systems Work and Especially How They Fail that is famous in systems thinking is colloquially called ‘Gall’s Law’, although the book does not use that name. Gall’s Law refers to this excerpt from page 71:

“A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work. You have to start over with a working simple system.”[6]

The first sentence is Gall’s Law, and the second and third make up what is often called ‘Gall’s Inverse’. When we study complexity in systems and read case studies of failed systems and implementations, complexity and unknown variables are often identified as the cause of failure. Gall’s Law works because of what we stated about complexity earlier: it inherently brings uncertainty, it is unpredictable, it is complicated, and it is just plain difficult to deal with. The best way to manage complexity is to avoid starting out with complex system designs. As you’ll see as we move forward, the best way to avoid complex system designs for enterprise information systems is to reduce the number of system elements, interfaces, and integrations we need to manage.
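A quick way to see why the number of interfaces matters: if each of n systems integrates directly with every other, the worst-case count of point-to-point interfaces is n(n-1)/2, which grows quadratically. Routing integrations through a shared hub, such as a service bus, needs only about n connections, although the hub then becomes a critical element in its own right. The sketch below assumes the worst case of full pairwise integration and ignores the hub's internal complexity.

```python
def point_to_point_interfaces(n_systems: int) -> int:
    """Worst case: every system integrates directly with every other system."""
    return n_systems * (n_systems - 1) // 2


def hub_interfaces(n_systems: int) -> int:
    """Each system connects once to a shared integration hub (e.g., a service bus)."""
    return n_systems


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(f"{n} systems: {point_to_point_interfaces(n)} point-to-point vs {hub_interfaces(n)} via a hub")
```

Doubling from 10 to 20 systems raises the worst-case point-to-point count from 45 to 190 interfaces, each of which has to be documented, tested, and maintained.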

Other frameworks worth learning about make similar points, but in relation to decision making and decision support systems rather than purely to systems design. One, introduced to me by my colleague Andrew Stearns, is the Cynefin framework. Created by Dave Snowden, this framework breaks decision-making contexts down into five domains: clear, complicated, complex, chaotic, and confusion.

It is my hypothesis that Gall’s Law is effective because it lets one start from a place of understanding. As the system is extended, it allows incremental analysis of emergent behavior, adding an element of predictability and, with each forward-moving iteration, a chance to roll back changes to a working, well-understood product. Take, for example, a medical study on weight loss. If each participant changed their diet, exercised, and took supplements, and an effect was found, how could you attribute it to any one of those three variables? It would be difficult. A good study would isolate the variables and eventually test various combinations of them to determine each one's individual effect and contribution, using the well-known effects of each as a basis for developing hypotheses for the next step. Gall’s Law works the same way: through simplicity, we can know and understand the effects of individual variables, and then analyze emergent changes as each new variable (system element, interface, etc.) is introduced.
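The one-change-at-a-time approach described above can be sketched as a loop that always keeps a last known-good configuration. This is a minimal illustration under simplifying assumptions, not a prescribed method: a "system" is reduced to a set of named elements, and `passes_checks` is a hypothetical stand-in for whatever integration, regression, or acceptance tests a real project would run.

```python
from typing import Callable

System = frozenset[str]  # simplification: a configuration is just the set of elements it contains


def grow_incrementally(
    baseline: System,
    candidates: list[str],
    passes_checks: Callable[[System], bool],
) -> tuple[System, list[str]]:
    """Add one candidate element at a time, keeping only changes that pass the checks.

    Returns the last known-good system and the elements that were rolled back.
    """
    if not passes_checks(baseline):
        raise ValueError("start from a simple system that works")
    known_good = baseline
    rolled_back: list[str] = []
    for element in candidates:
        trial = known_good | {element}  # change exactly one variable per iteration
        if passes_checks(trial):
            known_good = trial  # promote the trial to the new known-good baseline
        else:
            rolled_back.append(element)  # revert to the previous working system
    return known_good, rolled_back


if __name__ == "__main__":
    # Hypothetical check: "cache" and "sync_job" each work alone but conflict when combined.
    def passes(system: System) -> bool:
        return not {"cache", "sync_job"} <= system

    final, reverted = grow_incrementally(frozenset({"core"}), ["cache", "sync_job", "reporting"], passes)
    print(sorted(final), reverted)
```

In this toy run, the conflict surfaces at the exact step where `sync_job` is added, so that single change can be rolled back while the rest of the system keeps working. The rollback pinpoints which step introduced the problem, though the root cause may still be an interaction with an element added earlier.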

[1] INCOSE (2019) Systems Engineering and System Definitions

[2] INCOSE (2015) A Complexity Primer for Systems Engineering White Paper

[3] A. Kossiakoff et al. (2020) Systems Engineering Principles and Practice, 3rd Edition, p. 11, Figure 1.1

[4] Ken Orr (1981) Structured Requirements Definition

[5] Grady Booch (1991) Object-Oriented Design with Applications, p. 11; and 1994, 1997, 2007, 2009

[6] John Gall (1975) Systemantics: How Systems Really Work and How They Fail, p. 71